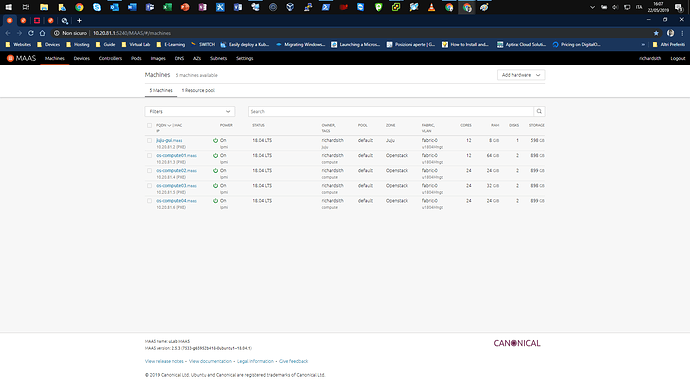

I’ve deployed Kubernetes Core on my private cloud (an OpenStack lab) to study how K8s works, but I can’t reach its GUI. Here is my lab setup:

IP plan:

Network: 10.20.81.0/24

Maas: 10.20.81.1

Juju: 10.20.81.2

Openstack: 10.20.81.21-24

External Gateway: 10.20.81.254

Private Network: 10.1.0.0/24

Private Gateway: 10.1.0.1

Private DHCP service: 10.1.0.10

Network topology:

                        +-------------+
                          Firewall
                          10.20.81.254
                        +-------------+
                               |
+-------------------------------------------------------------+
                            Switch
+-------------------------------------------------------------+
    |   |                   ||   |                |    |
+--------------+        +-------------+    +------------------+
|Maas+Juju              |Juju Gui          |Openstack
|10.20.81.1             |10.20.81.2        |10.20.81.21-24
+--------------+        +-------------+    +------------------+
                                                    |
                     +----------------------------------------+
                     Private Subnet-1           Public Subnet-2
                     10.1.0.0/24                10.20.81.0/24
                     +---+--+--+                +---+------+
                         |                          |
                         |                          |
                       +-+--+                     +-+-+
                       |    |                     |   |
                       |Juju|                     |K8s|
                       |Gui |                     |   |
                       |    |                     |   |
                       +----+                     +---+

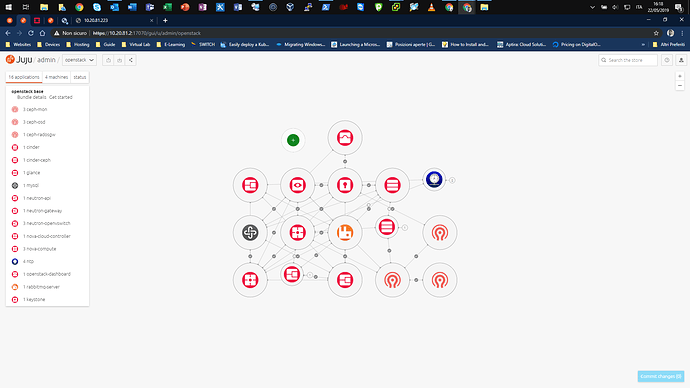

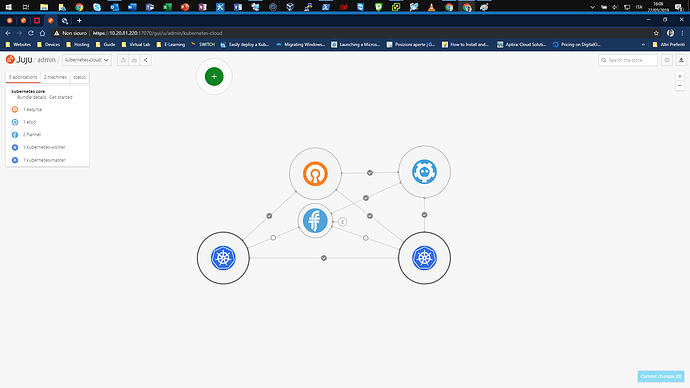

The deployment from Juju to OpenStack completes correctly. Here is its status:

$:juju status

Model             Controller            Cloud/Region               Version  SLA          Timestamp
kubernetes-cloud  openstack-controller  openstack-cloud/RegionOne  2.5.4    unsupported  08:24:06Z

App                Version  Status  Scale  Charm              Store       Rev  OS      Notes
easyrsa            3.0.1    active  1      easyrsa            jujucharms  235  ubuntu
etcd               3.2.10   active  1      etcd               jujucharms  415  ubuntu
flannel            0.10.0   active  2      flannel            jujucharms  404  ubuntu
kubernetes-master  1.14.1   active  1      kubernetes-master  jujucharms  654  ubuntu  exposed
kubernetes-worker  1.14.1   active  1      kubernetes-worker  jujucharms  519  ubuntu  exposed

Unit                  Workload  Agent  Machine  Public address  Ports           Message
easyrsa/0*            active    idle   1/lxd/0  10.27.75.36                     Certificate Authority connected.
etcd/0*               active    idle   1        10.1.0.13       2379/tcp        Healthy with 1 known peer
kubernetes-master/0*  active    idle   1        10.1.0.13       6443/tcp        Kubernetes master running.
  flannel/1           active    idle            10.1.0.13                       Flannel subnet 10.1.80.1/24
kubernetes-worker/0*  active    idle   0        10.1.0.15       80/tcp,443/tcp  Kubernetes worker running.
  flannel/0*          active    idle            10.1.0.15                       Flannel subnet 10.1.19.1/24

Machine  State    DNS          Inst id                               Series  AZ    Message
0        started  10.1.0.15    c68e8cc3-e85f-4c90-b5d3-0119938f893e  bionic  nova  ACTIVE
1        started  10.1.0.13    8f13da27-9ea3-4464-9411-e2875d131c51  bionic  nova  ACTIVE
1/lxd/0  started  10.27.75.36  juju-1284d9-1-lxd-0                   bionic  nova  Container started
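
To relate the addresses in this status to the IP plan, here is a minimal sketch that classifies a few of them by network. It assumes a plain prefix match, which is only adequate because both networks in the plan are /24. `classify_ip` is a hypothetical helper name, not part of Juju or kubectl.

```shell
#!/bin/sh
# Classify an IPv4 address against the two /24 networks from the IP plan.
# Assumption: a textual prefix match stands in for a real CIDR check,
# which works here only because both networks are /24.
classify_ip() {
    case "$1" in
        10.1.0.*)   echo "private" ;;
        10.20.81.*) echo "public" ;;
        *)          echo "other" ;;
    esac
}

# Addresses taken from the IP plan and the status output above.
for ip in 10.1.0.13 10.1.0.15 10.27.75.36 10.20.81.2; do
    printf '%s %s\n' "$ip" "$(classify_ip "$ip")"
done
```

The master and worker units both sit on the private network (10.1.0.0/24), so a browser on 10.20.81.0/24 can reach them only through a floating IP or a route via the private gateway.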

Then, on the master unit:

$:juju ssh kubernetes-master/0

ubuntu@juju-1284d9-kubernetes-cloud-0:~$ kubectl cluster-info

Kubernetes master is running at https://10.1.0.13:6443

Heapster is running at https://10.1.0.13:6443/api/v1/namespaces/kube-system/services/heapster/proxy

CoreDNS is running at https://10.1.0.13:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Metrics-server is running at https://10.1.0.13:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy

Grafana is running at https://10.1.0.13:6443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

InfluxDB is running at https://10.1.0.13:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb:http/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

ubuntu@juju-1284d9-kubernetes-cloud-0:~$

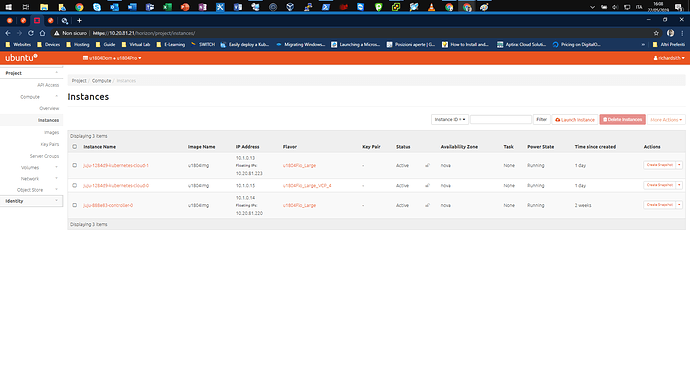

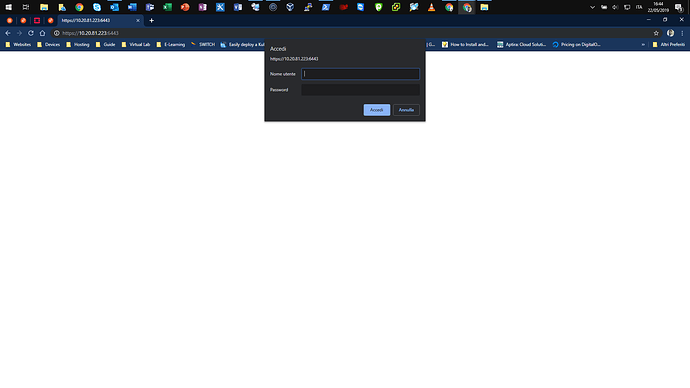

I then assigned a floating IP to the kubernetes-master/0 instance, but when I open it in my browser the result is:

404 Not Found

nginx/1.15.8
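
One possible way to see which service answers on the floating IP is to probe the ports listed in `juju status` (80/tcp and 443/tcp on the worker, 6443/tcp on the master). This is only a sketch: it assumes `curl` is installed and that the OpenStack security group lets those ports through. `candidate_urls` and `probe` are hypothetical helper names.

```shell
#!/bin/sh
# List the URLs worth probing on a host, using the ports from `juju status`.
candidate_urls() {
    host="$1"
    echo "http://${host}:80/"
    echo "https://${host}:443/"
    echo "https://${host}:6443/"
}

# Print the HTTP status code returned by each URL.
# curl reports 000 when no HTTP response was received at all.
probe() {
    for url in $(candidate_urls "$1"); do
        # -k skips certificate verification (lab use only).
        code=$(curl -k -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
        echo "$url -> $code"
    done
}

# Only probe when a host is given, e.g.: sh probe.sh <floating-ip>
if [ -n "$1" ]; then
    probe "$1"
fi
```

A 404 served by nginx on port 80 may mean the request reached a web server that has no route for `/`, not the dashboard itself, but that is an assumption to verify against the probe output.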

The guide suggests the following:

kubectl proxy

By default, this establishes a proxy running on your local machine and the

kubernetes-master unit. To reach the Kubernetes dashboard, visit

http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/
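
As a hedged sketch of how the proxy approach could be combined with a floating IP, the script below prints (but does not run) the commands for an SSH tunnel and the resulting dashboard URL. `FLOATING_IP` is a placeholder from the documentation address range, not a value from this lab. The sketch also assumes that port 22 is open in the security group and that the `ubuntu` user accepts your SSH key. `dashboard_url` and `print_tunnel_steps` are hypothetical helper names.

```shell
#!/bin/sh
# Placeholder values; override them through the environment.
FLOATING_IP="${FLOATING_IP:-203.0.113.10}"   # assumption: not a real lab address
PROXY_PORT="${PROXY_PORT:-8001}"             # kubectl proxy's default port

# Build the dashboard URL from the guide for a given host and port.
dashboard_url() {
    printf 'http://%s:%s/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/\n' "$1" "$2"
}

# Print the two commands to run in separate terminals:
#   1. start kubectl proxy on the master unit;
#   2. forward the local port to the master through the floating IP.
print_tunnel_steps() {
    echo "juju ssh kubernetes-master/0 'kubectl proxy --port=${PROXY_PORT}'"
    echo "ssh -N -L ${PROXY_PORT}:localhost:${PROXY_PORT} ubuntu@${FLOATING_IP}"
}

print_tunnel_steps
dashboard_url localhost "$PROXY_PORT"
```

With the tunnel up, the browser still opens a `localhost` URL, but the traffic travels through the floating IP. The API proxy is never exposed directly on a public address.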

In my case, however, I’d like to view the dashboard through the assigned floating IP. Can someone help me? Thanks.