I’m really new to Juju, containers, and Kubernetes, so please bear with me.

I am using my own MAAS cloud with plenty of resources. With Juju, I deployed Charmed Kubernetes. I have my own private Docker registry, which I am trying to pull my test containers from.

Some details:

ubuntu@golang-project:~$ juju --version

2.6.6-bionic-amd64

ubuntu@golang-project:~$ kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.2", GitCommit:"f6278300bebbb750328ac16ee6dd3aa7d3549568", GitTreeState:"clean", BuildDate:"2019-08-05T17:09:13Z", GoVersion:"go1.12.7", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.2", GitCommit:"f6278300bebbb750328ac16ee6dd3aa7d3549568", GitTreeState:"clean", BuildDate:"2019-08-05T17:06:39Z", GoVersion:"go1.12.7", Compiler:"gc", Platform:"linux/amd64"}

Create cluster:

ubuntu@golang-project:~$ juju add-model k8s

ubuntu@golang-project:~$ juju deploy charmed-kubernetes --model k8s

ubuntu@golang-project:~$ juju scp kubernetes-master/0:config ~/.kube/config

Everything looks good:

ubuntu@golang-project:~$ juju status

Model  Controller  Cloud/Region  Version  SLA          Timestamp
k8s    maas-cloud  maas-cloud    2.6.5    unsupported  18:13:31Z

App                    Version  Status  Scale  Charm                  Store       Rev  OS      Notes
containerd                      active      5  containerd             jujucharms    2  ubuntu
easyrsa                3.0.1    active      1  easyrsa                jujucharms  254  ubuntu
etcd                   3.2.10   active      3  etcd                   jujucharms  434  ubuntu
flannel                0.10.0   active      5  flannel                jujucharms  425  ubuntu
kubeapi-load-balancer  1.14.0   active      1  kubeapi-load-balancer  jujucharms  649  ubuntu  exposed
kubernetes-master      1.15.2   active      2  kubernetes-master      jujucharms  700  ubuntu
kubernetes-worker      1.15.2   active      3  kubernetes-worker      jujucharms  552  ubuntu  exposed

Unit                      Workload  Agent  Machine  Public address  Ports           Message
easyrsa/0*                active    idle   0        192.168.1.32                    Certificate Authority connected.
etcd/0*                   active    idle   1        192.168.1.33    2379/tcp        Healthy with 3 known peers
etcd/1                    active    idle   2        192.168.1.34    2379/tcp        Healthy with 3 known peers
etcd/2                    active    idle   3        192.168.1.48    2379/tcp        Healthy with 3 known peers
kubeapi-load-balancer/0*  active    idle   4        192.168.1.35    443/tcp         Loadbalancer ready.
kubernetes-master/0       active    idle   5        192.168.1.37    6443/tcp        Kubernetes master running.
  containerd/4            active    idle            192.168.1.37                    Container runtime available.
  flannel/4               active    idle            192.168.1.37                    Flannel subnet 10.1.75.1/24
kubernetes-master/1*      active    idle   6        192.168.1.46    6443/tcp        Kubernetes master running.
  containerd/3            active    idle            192.168.1.46                    Container runtime available.
  flannel/3               active    idle            192.168.1.46                    Flannel subnet 10.1.78.1/24
kubernetes-worker/0       active    idle   7        192.168.1.45    80/tcp,443/tcp  Kubernetes worker running.
  containerd/0            active    idle            192.168.1.45                    Container runtime available.
  flannel/0               active    idle            192.168.1.45                    Flannel subnet 10.1.2.1/24
kubernetes-worker/1*      active    idle   8        192.168.1.49    80/tcp,443/tcp  Kubernetes worker running.
  containerd/2*           active    idle            192.168.1.49                    Container runtime available.
  flannel/2*              active    idle            192.168.1.49                    Flannel subnet 10.1.40.1/24
kubernetes-worker/2       active    idle   9        192.168.1.47    80/tcp,443/tcp  Kubernetes worker running.
  containerd/1            active    idle            192.168.1.47                    Container runtime available.
  flannel/1               active    idle            192.168.1.47                    Flannel subnet 10.1.85.1/24

Machine  State    DNS           Inst id        Series  AZ       Message
0        started  192.168.1.32  up-bee         bionic  default  Deployed
1        started  192.168.1.33  master-iguana  bionic  default  Deployed
2        started  192.168.1.34  open-liger     bionic  default  Deployed
3        started  192.168.1.48  handy-goblin   bionic  default  Deployed
4        started  192.168.1.35  normal-goat    bionic  default  Deployed
5        started  192.168.1.37  heroic-civet   bionic  default  Deployed
6        started  192.168.1.46  driven-ape     bionic  default  Deployed
7        started  192.168.1.45  superb-duck    bionic  default  Deployed
8        started  192.168.1.49  vital-caiman   bionic  default  Deployed
9        started  192.168.1.47  fond-chow      bionic  default  Deployed

I compiled my simple Go app and built it into an Alpine container, then pushed it to my Docker registry. Mind you, the registry is insecure: plain HTTP, no HTTPS.

ubuntu@golang-project:~$ docker push 192.168.1.44:5000/goapp:latest

The push refers to repository [192.168.1.44:5000/goapp]

8e5d795c7ed6: Pushed

latest: digest: sha256:ddb2459d8b5deb384f00852ad93038ea52430996a5ef565cbc3002b864380746 size: 528

Tell Juju about the custom registry (docs):

ubuntu@golang-project:~$ juju config containerd custom_registries='[{"url": "http://192.168.1.44:5000", "username": "admin", "password": "password01"}]'

Create a pod YAML file at .kube/goapp.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: gopod
spec:
  containers:
  - name: goapp
    image: 192.168.1.44:5000/goapp:latest
    imagePullPolicy: Always

Send it!

ubuntu@golang-project:~$ kubectl apply -f .kube/goapp.yaml

Watch it not work…

ubuntu@golang-project:~$ kubectl describe pod gopod

Name:         gopod
Namespace:    default
Node:         superb-duck/192.168.1.45
Start Time:   Thu, 08 Aug 2019 17:22:30 +0000
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{},"name":"gopod","namespace":"default"},"spec":{"containers":[{"image":"192.168...
Status:       Pending
IP:           10.1.2.76
Containers:
  goapp:
    Container ID:
    Image:          192.168.1.44:5000/goapp:latest
    Image ID:
    Port:           <none>
    Host Port:      <none>
    State:          Waiting
      Reason:       ImagePullBackOff
    Ready:          False
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-87vvj (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  default-token-87vvj:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-87vvj
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                  From                  Message
  ----     ------     ----                 ----                  -------
  Normal   Scheduled  45m                  default-scheduler     Successfully assigned default/gopod to superb-duck
  Normal   Pulling    44m (x4 over 45m)    kubelet, superb-duck  Pulling image "192.168.1.44:5000/goapp:latest"
  Warning  Failed     44m (x4 over 45m)    kubelet, superb-duck  Failed to pull image "192.168.1.44:5000/goapp:latest": rpc error: code = Unknown desc = failed to resolve image "192.168.1.44:5000/goapp:latest": no available registry endpoint: failed to do request: Head https://192.168.1.44:5000/v2/goapp/manifests/latest: http: server gave HTTP response to HTTPS client
  Warning  Failed     44m (x4 over 45m)    kubelet, superb-duck  Error: ErrImagePull
  Warning  Failed     10m (x152 over 45m)  kubelet, superb-duck  Error: ImagePullBackOff
  Normal   BackOff    45s (x196 over 45m)  kubelet, superb-duck  Back-off pulling image "192.168.1.44:5000/goapp:latest"

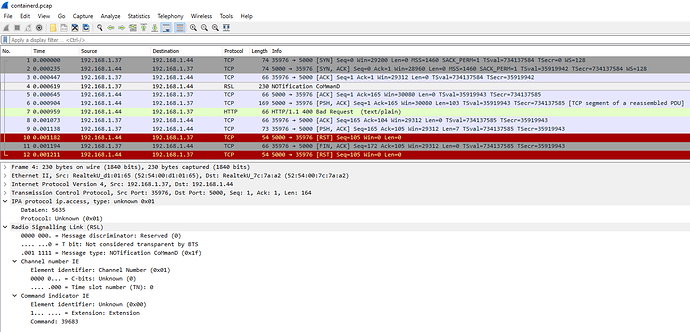
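The failed pull event names the exact URL that was requested: the registry's v2 manifest endpoint, over https. As a rough sketch (the helper name `manifest_url` is made up for illustration and only handles `host[:port]/name[:tag]` references, not digests), the same URL can be rebuilt for either scheme so that the http variant can be probed by hand:

```shell
#!/usr/bin/env bash
# manifest_url SCHEME IMAGE
# Hypothetical helper: builds the registry v2 manifest URL for an image
# reference of the form host[:port]/name[:tag]. Digest references
# (name@sha256:...) are not handled.
manifest_url() {
  local scheme="$1" image="$2"
  local host="${image%%/*}"   # everything before the first "/"
  local rest="${image#*/}"    # everything after the first "/"
  local name="${rest%%:*}"    # repository name without the tag
  local tag="latest"          # default tag when none is given
  if [[ "$rest" == *:* ]]; then
    tag="${rest##*:}"
  fi
  printf '%s://%s/v2/%s/manifests/%s\n' "$scheme" "$host" "$name" "$tag"
}

# The URL from the error message, then its plain-HTTP counterpart.
manifest_url https 192.168.1.44:5000/goapp:latest
manifest_url http 192.168.1.44:5000/goapp:latest
```

Running something like `curl -I -u admin "$(manifest_url http 192.168.1.44:5000/goapp:latest)"` from the worker node should then show whether the registry answers over plain HTTP from that machine.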

Manually pulling the image with containerd's ctr tool on the Kubernetes node works:

ubuntu@superb-duck:~$ sudo ctr image pull -k --plain-http -user admin 192.168.1.44:5000/goapp:latest

Password:

192.168.1.44:5000/goapp:latest: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:ddb2459d8b5deb384f00852ad93038ea52430996a5ef565cbc3002b864380746: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:231e98b468c142d1f9bbec3b5a09df2d8bcae0cfe8ea4323fd2dc72ae699ae1d: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:385f52d7a9c13c560007d881cf4ac040cb07a350438f7d4bcea9e32191ebdefc: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 0.2 s total: 1.2 Mi (5.9 MiB/s)

unpacking linux/amd64 sha256:ddb2459d8b5deb384f00852ad93038ea52430996a5ef565cbc3002b864380746...

done

I am at a loss now. I apologize in advance, since my lack of experience is probably hampering my ability to dig deeper. My assumption is that either the containerd custom_registries config isn’t being respected, or HTTPS is being forced and Juju isn’t accounting for the possibility of a plain-HTTP registry.
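One possible way to test the first half of that assumption is to look for the registry address in the containerd config on a worker node. The sketch below is only that: `registry_lines` is a made-up helper, the sample file content is an assumed example of a CRI registry mirror entry, and `/etc/containerd/config.toml` is containerd's default config path, which may not be where the charm writes its config.

```shell
#!/usr/bin/env bash
# registry_lines FILE HOST
# Hypothetical helper: prints every line of FILE that mentions HOST
# (fixed-string match, with line numbers). Returns non-zero if none match.
registry_lines() {
  local file="$1" host="$2"
  grep -n -F -- "$host" "$file"
}

# Demo against a throwaway file. The two lines are an ASSUMED example of
# what a mirror entry for an HTTP registry could look like, not output
# taken from a real node.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
[plugins.cri.registry.mirrors."192.168.1.44:5000"]
  endpoint = ["http://192.168.1.44:5000"]
EOF

if registry_lines "$sample" "192.168.1.44:5000"; then
  echo "registry is mentioned in the config"
else
  echo "registry is NOT mentioned in the config"
fi
rm -f "$sample"
```

On a real node the equivalent check would be something like `sudo grep -n -F 192.168.1.44:5000 /etc/containerd/config.toml` on superb-duck. If nothing matches, or the endpoint shown is https, that would point at the custom_registries setting not reaching containerd.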

Can anyone assist?