Hi folks

This relates to my other WIP post but its blocked me up for 24 hours and hosed a working K8S cluster so I figure its worth splitting out.

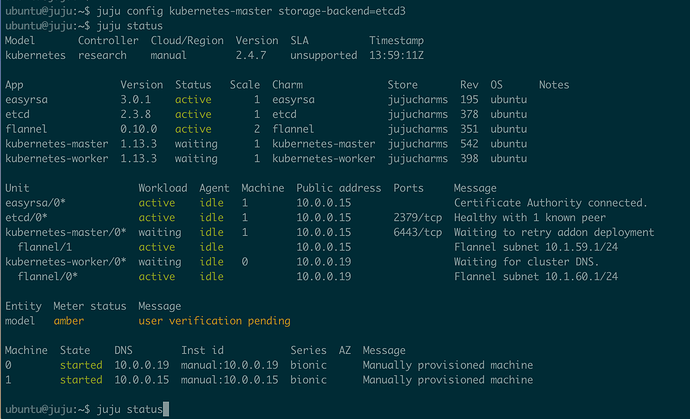

I’ve got 2 servers, one for K8S master and one K8S worker.

I’ve wired up manual CDK installs before but when I charm upgraded my working one this also happened:

On the Master the API server fails to start, it tries to query:

http://127.0.0.1:8080/api/v1/namespaces/kube-system/endpoints/kube-controller-manager?

but just timeouts and throws a crap load of warnings.

On the worker:

Feb 11 12:52:03 ubuntu kube-proxy.daemon[12420]: cat: /var/snap/kube-proxy/722/args: No such file or directory

Feb 11 12:52:03 ubuntu kube-proxy.daemon[12420]: W0211 12:52:03.493741 12420 server.go:194] WARNING: all flags other than --config, --write-config-to, and --cleanup are deprecated. Please begin using a config file ASAP.

Feb 11 12:52:03 ubuntu kube-proxy.daemon[12420]: I0211 12:52:03.500258 12420 server.go:429] Neither kubeconfig file nor master URL was specified. Falling back to in-cluster config.

Feb 11 12:52:03 ubuntu kube-proxy.daemon[12420]: F0211 12:52:03.500271 12420 server.go:377] unable to load in-cluster configuration, KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT must be defined

and fails to start.

I’m not sure which is the problem, but if I look at a working CDK I deployed into AWS, I see

/root/cdk/kubelet/config.yaml

which doesn’t exist on mine, and also in the error the args file/folder certainly doesn’t exist in the snap, but i’m not sure what creates it.

On the master the syslog also says:

Feb 11 12:52:19 ubuntu kube-apiserver.daemon[5177]: Error: error creating self-signed certificates: mkdir /var/run/kubernetes: permission denied

right back at the top but that folder doesn’t exist on the working cluster either, so i’m not convinced its a disaster